Some think email newsletters are a tired approach, just one more item to delete from an inbox. However, email campaigns are proving to be an increasingly effective and powerful tool for staying in front of your network, especially in today’s digital age. What takes time is determining the best approach to attract readers and earn opens. Whether you’ve been sending email newsletters for years or are just getting started, A/B testing lets you determine which variation or element of your newsletter earns the most attention and the best results.

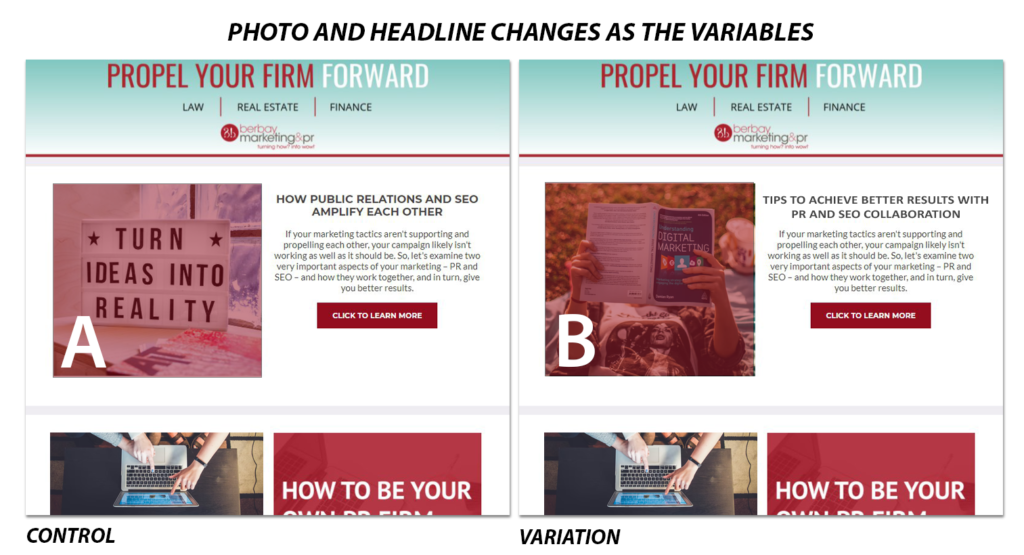

A/B testing, also called split testing, is the process of sending a variation of your primary email campaign to a subset of your contact list to determine whether that variation yields better results than the original. While A/B testing can get complex, we’ve outlined some simple tips you can try in your next newsletter.

Why should you try A/B testing, and how do you start?

A/B testing is a great way to determine statistically which elements of your email work best to drive opens and clicks, rather than relying on your gut feeling alone.

Step One: Decide which metric you want to improve. For example, maybe your latest newsletters haven’t received as many opens or clicks as previous ones, or you’ve seen a steady decline in these numbers. Once you know which metric you want to move, you can compare the results you’ve been seeing with the results your A/B tests generate.

Step Two: Once you’ve identified your goal, choose one email version to serve as the control (sent with no edits), and apply a single change to the other version. Make only one change between the two emails being tested so that you know exactly what affected performance.

Step Three: Then, randomly split your email contacts into two groups of equal size. The goal is to minimize any factors, other than the difference between the two email versions, that could cause the results to differ.
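Many email platforms offer a built-in A/B split, but if you manage your list yourself, the random split in Step Three can be sketched in a few lines of Python. This is a minimal sketch, assuming your contacts can be exported as a plain list of email addresses; the `split_contacts` helper and the example addresses are hypothetical. Shuffling first ensures that neither group is biased by how the list happens to be sorted (for example, by sign-up date).

```python
import random


def split_contacts(contacts, seed=None):
    """Randomly split contacts into two groups whose sizes differ by at most one.

    Hypothetical helper for illustration; `seed` makes the split repeatable.
    """
    shuffled = list(contacts)  # copy so the original list is left untouched
    rng = random.Random(seed)
    rng.shuffle(shuffled)  # random order removes any bias from list sorting
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical contact list of 10 addresses
contacts = [f"reader{i}@example.com" for i in range(10)]
group_a, group_b = split_contacts(contacts, seed=42)
print(len(group_a), len(group_b))  # prints: 5 5
```

Group A would receive the control email and group B the version with the single change. With an odd number of contacts, the second group gets the extra person.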

What variables can you evaluate in your email campaigns with A/B testing?

- Send Time: Research the best times to send your email (based on industry best practices, if such data is available online). This will help you prioritize time slots worth targeting. Send your control email consistently at the same time, and use the other version to test different send times for comparison.

- Sender: Some people may not want to open emails from a name they don’t recognize. Try sending from a general firm email address (which may look less suspicious to recipients) or from the head of the firm’s email address to see whether the sender affects the email’s open rate.

- Subject Line: Subject lines are an important driver of email opens because they give the recipient a preview of what’s inside. Get creative! You have one chance to catch someone’s attention, and it’s through your subject line. As you test more subject lines, you’ll get a better idea of what your audience finds interesting and worthwhile enough to open.

- Content: Spend time reviewing the design and layout of your email for potential improvements. Is there a graphic that could be moved? Should you add a section or call-to-action buttons, or change section titles? The options are endless, but remember to test each possible change one at a time through A/B testing.

By comparing the data, such as the open rate and click-through rate, you’ll be able to gain a better understanding of what readers find interesting and whether there are any trends in their activity. You may find that some changes make a bigger impact than others, but every little edit you try through A/B testing will ultimately help keep your emails fresh and relevant.
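To check whether a difference in open rates is more than random chance, one common option is a two-proportion z-test. The sketch below is a minimal example, assuming you have exported the number of emails sent and opened for each version; the `compare_open_rates` function and the sample figures are hypothetical. It relies on the normal approximation, which is only reasonable when each group has a fairly large number of both opens and non-opens.

```python
import math


def compare_open_rates(opens_a, sent_a, opens_b, sent_b):
    """Two-proportion z-test on open rates (hypothetical helper).

    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided.
    """
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate, assuming both versions actually perform the same
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        raise ValueError("Cannot test: open rate is 0% or 100% in both groups")
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical results: control (A) vs. the version with one change (B)
rate_a, rate_b, z, p = compare_open_rates(opens_a=200, sent_a=1000,
                                          opens_b=250, sent_b=1000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p:.4f}")
```

A p-value below a threshold chosen before the test (0.05 is a common convention) suggests the difference is unlikely to be noise alone. The same function works for click-through rate if you pass click counts in place of opens.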

Need assistance with your email marketing? Contact Berbay at 310-405-7343 or info@berbay.com.